The Politics of Data: Data Commoning as a Territory of Transition

Access to data, and the capability to generate insights from it, is shaping the landscape of adaptive advantage, leading to greater power asymmetries. This essay explores some of the more obfuscated ways society is being shaped by the data-driven strategies of ‘big tech’ and, in doing so, reveals the potential role of data commoning as a territory of transition.

Thermodynamics, the branch of physics dealing with the properties and dynamics of energy, suggests something seemingly unnerving about our universe: that it tends toward disorder. Yet, despite this, something has been able to carve out tiny islands of ‘order’ in this vast ocean of increasing entropy: life. How and why life arose is an ongoing question, inviting perspectives that range from many religious and philosophical traditions to more recent scientific endeavours in evolutionary biology, complexity science and, perhaps most recently, information theory. While the real mystery of life – indeed, of why we exist and experience at all – is arguably beyond the explanatory power of any theory, some theories are helpful in articulating the deeper operating principles of biology and of self-organising systems in general – including human society.

The pioneering work on ‘dissipative structures’ by the 20th-century chemist and Nobel laureate Ilya Prigogine describes living things as self-organising systems capable of operating far from thermodynamic equilibrium. Such systems achieve this, in part, by encoding information about (i.e. modelling) their environments. For example, light-sensitive proteins (a kind of ‘proto-eye’) on the surface of many single-celled organisms indicate the direction of a light source, and thus the direction in which to ‘swim’ to aid photosynthesis. For social organisms like honeybees, a ‘waggle dance’ performed by a returning worker bee, along with the chemical signature of the flower, tells the hive in which direction and how far to travel to find a particular flower patch. This capacity for organisms to encode environmental information appears essential for all life. Indeed, it is a core operating principle for self-organising systems, which must continually learn and adapt within a universe of increasing entropy.

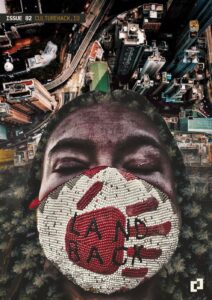

The centrality of information processing in the goings-on of life – from single-celled amoebas to complex, social organisms like humans – offers an important perspective on both the potential and the political implications of data in territories of transition. Put simply, if data can be regarded as the ‘raw ingredients’ by which society makes sense of the world, then it is critical to ensure equitable access to, and capabilities for, data-driven decision-making. As we will see, today these capabilities are far from evenly distributed. For the most part, they remain concentrated among well-resourced organisations led and funded by a technocratic and capital elite: an amalgamation known as ‘big tech’. The remainder of this essay explores some of the more obfuscated ways society is being shaped by the data-driven strategies of ‘big tech’ and, in doing so, reveals opportunities for resisting them, as well as for transforming our shared information systems under a commons-centric vision.

Human society is not exempt from the laws of thermodynamics. As a self-organising system, society, too, must learn to sense and respond to environmental signals. ‘Data’, a term describing discrete informational states, is essential to this process. While commonly encoded by computers today, data is ultimately substrate-independent: in addition to pixels on a screen, this sentence could be represented as markings on a stone slab or in the vibrations of air when I speak. Nor is data a human construct. Patterns in nature reveal themselves to organisms who sense, encode and process these signals as data. Today, digital computing could be regarded as a kind of extended phenotype that externalises this process for human beings, ultimately making the encoding, storage and processing of data pervasive. We use geophysical data to better predict changing climate patterns and genomic data to design better disease-prevention strategies. If you live in a city with public transportation services, it’s likely that data is being used to increase energy efficiency and improve scheduling. Despite its universality, however, data is also being used in increasingly sophisticated ways by actors who may not necessarily have your or society’s best interests at heart.

If being able to encode environmental information confers an evolutionary advantage in the natural world, then it follows that those who have greater access to and capabilities for information processing will be better off than their information-scarce counterparts. Such power asymmetries are already present and playing out today. Take, for example, the rise of the three largest internet companies, Facebook, Google and Amazon, which together have a combined market capitalisation of roughly $3.4 trillion, larger than the GDPs of many countries. Their power is due, in no small part, to their ability to leverage data.

The internet is, at its most basic level, simply a way of speaking: a set of protocols (procedures governing interaction) allowing computers to speak to, and be understood by, one another. Provided one has access to an internet-enabled device, participation in the network at this level is radically open. Furthermore, it is a positive-sum game: rather than being a burden, new participants increase the potential value and utility of the network. Arguably, it is these affordances that enabled the internet to grow to encompass a vast number of people, services and information. It is, by many accounts, an unprecedented digital commons, and has led to profound changes in human society. Unfortunately, the internet today looks very different from the one envisioned by the early hackers and left-leaning activists who saw it as an opportunity to develop a space of disintermediated power, equality and creative expression. In some corners this vision is still alive and well, but over the past couple of decades, the majority of activity has come to take place inside the networked walls of a few dominant players. The interiors of these walls are vast and all-encompassing, offering the illusion of unmediated, peer-to-peer interaction. As will become clear, these players understand the power of data better than anyone else.

If you spend time online today, it is likely that data about your activity is being recorded. Depending on the application or website, this may range from relatively harmless ‘cookies’ to more sophisticated tracking of your attention and behaviour. In some cases, the data from the latter may be sent to distant servers, where it is pooled with data from potentially hundreds of thousands of other ‘sessions’ to train sophisticated AI models designed to do one thing better than anything else: predict your behaviour. For search engines and social network platforms whose business models consist of selling user attention to advertisers, or for online marketplaces that earn fees by orchestrating the trading of goods and services, strong prediction models generally result in increased earnings. By leveraging insights from these models, platforms are able to significantly improve the probability that users will engage with products and interact with advertisers. The metric capturing the success of these interactions (i.e. the proportion that end in a click or a purchase) is commonly referred to as the ‘conversion rate’.

While those who have come of age during the rise of social media may have normalised this kind of application of their personal data – have, perhaps, come to accept it as an inevitable trade-off of being able to access ‘free’ services – others may rightly feel concerned that the integrity of their choice-making is at risk. At what point does influence become a matter of psychological manipulation and/or control? One key consideration is information asymmetry: who has more information about the other?

Information asymmetry enables undue influence.

Machine learning systems – in particular artificial neural networks, a popular technique loosely inspired by biological brains – generally require large datasets for training. Furthermore, these systems are often designed to improve over time as data about their own successes and failures is fed back into their training datasets. A learning feedback loop emerges, improving their capacity to predict and ultimately steer behaviour. In other words, these are sophisticated systems trained on enormous volumes of personal data and applied in a largely obfuscated way, through the subtle orchestration of digital environments, to influence the behaviour of human beings. This information (or power) asymmetry is a clear indication of what is referred to in legal contexts as ‘undue influence’: excessive persuasion that deprives a person of their capacity for free choice.
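The learning feedback loop can be illustrated with a toy simulation. The sketch below uses an epsilon-greedy bandit, a simple reinforcement-learning technique chosen here purely for illustration; it is not a claim about what any platform actually runs. The system decides which of several hypothetical items to show, observes whether a simulated user clicks, and feeds that outcome back into its own estimates. The function name, click probabilities and parameters are all assumptions.

```python
import random

def run_feedback_loop(click_probs, rounds=10000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy loop: show an item, observe a click, update estimates.

    click_probs: hypothetical probability that a simulated user clicks each item.
    Returns (estimated click rate per item, number of times each item was shown).
    """
    rng = random.Random(seed)
    n = len(click_probs)
    shows = [0] * n   # how many times each item was displayed
    clicks = [0] * n  # how many of those displays ended in a click

    def estimate(i):
        # Observed click rate so far; items never shown are estimated at 0.0
        return clicks[i] / shows[i] if shows[i] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.randrange(n)             # explore: try a random item
        else:
            choice = max(range(n), key=estimate)  # exploit: best item so far
        shows[choice] += 1
        # Simulated user response; in a real system this would be behavioural data
        if rng.random() < click_probs[choice]:
            clicks[choice] += 1                   # the outcome feeds back into the estimates

    return [estimate(i) for i in range(n)], shows

estimates, shows = run_feedback_loop([0.02, 0.05, 0.12])
print(shows)  # the third item should end up being shown most often
```

Nothing in the loop is told which item users prefer; it learns that purely from the outcomes of its own earlier choices, which is the feedback dynamic described above.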

While we might believe ourselves to be free agents, associating with and interacting within relatively neutral online spaces, it may be more appropriate to say that we are sitting in the living rooms of Mark Zuckerberg and Jeff Bezos. Except that each time we visit, the furniture and decorations are rearranged to our personal liking. We may be persuaded to indulge in the plentiful snacks and to admire the imagery hung upon the walls. A smart speaker beckons for our command. Even our friends are here. By all appearances, it is a space of our choosing. Yet something is amiss. We understand that the eyes peering back at us from behind the hanging pictures are not the reflection of our own. The speaker, too, listens to more than just our isolated command. These eyes and ears never tire of noticing every gesture, hesitation and intrigue.

In one sense, we are participants in a mass psychosocial experiment informed by the latest research at the intersection of artificial intelligence, big data and human psychology. Like many research endeavours, the aim is to better understand the human being. However, these new data-driven experiments are primarily motivated by a desire – not to expand the frontiers of human knowledge – but to increase corporate earnings. Behind the invitation to ‘make the world more open and connected’ is the desire to extend the reach of corporate surveillance capitalism, with its impetus to connect every person under the shining banner of digital convenience.

Brett Scott says it best in his recent book, Cloudmoney: an illuminating dive into the fusion of finance and big tech:

“Max Weber used the term ‘the iron cage of bureaucracy’ to refer to the [older leviathans], alluding to the old government buildings with their drab offices, and the industrial companies with their mountains of filing cabinets. The twenty-first-century leviathans, though, want to transform the bars of the iron cage into a fine transnational digital mesh. This is what we are currently experiencing in the creep of automated surveillance capitalism, which is given different names depending on where it creeps: in cities it is called ‘smart cities’, in our homes ‘smart homes’, in our bodies ‘self-tracking’, and in developing countries, ‘digital inclusion’.”

Beyond the privacy-infringing and consumerist implications of this data-driven ‘digital mesh’, big tech is also shaping society in a subtler way: through our means of knowledge discovery. Estimating the true size of the internet is notoriously difficult due to its scale and networked nature. One estimate from 2014 placed the figure at around 1×10²⁴ bytes (comparable to estimates of the number of stars in the observable universe), and it is in all likelihood orders of magnitude larger today. Locating something in this vast ocean of data without knowing its precise location requires sophisticated indexing strategies and a great deal of computational capacity. This is what modern search engines are designed to do. We may liken them to maps: tools to help guide us through unfamiliar territory. Just as different maps might use colours and line weights to emphasise particular modes of transport, or scale to emphasise certain landmarks and geographical features, search engines organise and represent information in various ways. As with all technology, these strategies are designed according to the values, ontologies and orienting principles of their designers. For information systems such as search engines, these choices can have profound effects on how society makes sense of and orients itself in the world.
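One of the basic data structures behind search indexing is the inverted index, which maps each term to the documents that contain it, so a query can be answered without scanning every document. The sketch below is a minimal, hypothetical illustration: the tiny corpus, the function names and the "match every query term" rule are assumptions, and real search engines layer crawling, ranking and many other signals on top. The ranking step, which this sketch deliberately omits, is where many of the value-laden design choices discussed above come into play.

```python
import re
from collections import defaultdict

def tokenize(text):
    # Lower-case and split on anything that is not a letter or digit
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return the sorted IDs of documents containing every query term."""
    terms = tokenize(query)
    if not terms:
        return []
    # .get avoids inserting empty entries into the defaultdict for unknown terms
    matches = set.intersection(*(index.get(t, set()) for t in terms))
    return sorted(matches)

# Hypothetical three-document corpus
docs = {
    "a": "Community bookstores and local markets",
    "b": "Robo-taxis and the smart city",
    "c": "Local data commons for community groups",
}
index = build_index(docs)
print(search(index, "community local"))  # ['a', 'c']
```

Note that the index only says which documents match; deciding in which order to present them, and whether to present them at all, is a separate set of choices.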

Unsurprisingly, the design choices behind today’s most popular search engines are informed by the considerations of any 21st-century corporation. Consequently, the company’s own products and services, along with paid placements such as advertisements, are frequently prioritised over more ‘organic’ search results. This alone raises concerns about the integrity of knowledge discovery online – particularly when such content is made to closely resemble more ‘organic’ results. However, there are other design choices whose effects on knowledge discovery we can only speculate about. Here, too, data is critical to their implementation.

A search engine must necessarily employ ways to ‘reduce’ the totality of information available online to a more digestible size. As with maps, we trust that search engines do not impose any particular destination. Yet even this is becoming less of a certainty. As any seasoned internet voyager will know, engaging a search engine in the pursuit of new knowledge is typically our first port of call. Sometimes we know precisely what we’re looking for. Other times, we might be in an exploratory mood, content to stumble haphazardly through the many hyperlinks laid down by others before us. In one sense, we are like interstellar travellers exploring innumerable star systems and galaxies: the totality of recorded human knowledge. If we’re fortunate, we gain new perspectives and insights and return home with a deeper understanding of the cosmos. The search engine is our onboard navigational tool, helping us to identify interesting planetary systems and to avoid micro-meteors and black holes. Yet this explorative process of knowledge discovery is at risk of being circumvented by so-called ‘direct answers’. Introduced by Google in the mid-2010s, direct answers (which Google calls ‘featured snippets’) offer a quicker and more convenient way of discovering information than the traditional list of pages.

On the one hand, ‘direct answers’ are convenient when looking for specific answers to questions such as “how tall is Mt. Kilimanjaro?” or “what’s the largest animal on Earth?”, answers that may not typically incite much controversy. Of course, the world is more complex than such questions alone would make it appear. Enquiring into more contested subjects, such as the safety and efficacy of a vaccine, the events of a specific historical conflict, or geopolitical boundaries, reveals a messier political reality beneath. Beyond the more obvious possibility of false or misleading answers, how does a search engine know whether a subject is contested, and whose perspective should it draw from? If sources are being selected algorithmically, we must ask on what basis they are chosen. Complicating all of this is evidence that people tend to place high trust in the accuracy of ‘direct answers’; one study found this to be the case for medical queries.

In practice, of course, these systems could be designed to limit bias and improve the representation of more marginalised perspectives, for example. But how and by whom? Distrust in the usual purveyors of such systems is warranted when we consider the operating logics at work. Pure intentions risk being subsumed by contradictory aspirations. Furthermore, questions concerning sources of truth take us beyond technical discussions on ‘system proficiency’ and the universal applicability of Turing machines. Ultimately, they touch upon the nature of subjectivity, knowledge and ethics – indeed, of the experience of being human. At some point, we will also have to decide whether it is a good idea to let our thinking machines navigate such territory on our behalf.

Imagine that you are travelling in a foreign city. After arriving and settling in at your place of rest, you set out to explore the bustling metropolis. You know the name and general location of one of the last remaining indie bookstores in the city, though it is some distance away. In this imagined techno-capitalist future, public transportation and human-operated vehicles have been almost entirely replaced by silent fleets of private ‘robo-taxis’. You consider your options for getting to your destination: either you hail a robo-taxi and request that it drop you directly outside the bookstore, or you walk. The first is quick and convenient: assuming you entered the correct address, the vehicle takes the most direct route. Walking takes longer and, since the area is unfamiliar to you, is potentially marked by meandering paths and the occasional wrong turn. But in walking you are also able to take in the many dimensions of the city: its various sights, sounds and scents. You may begin to internalise the patterned landscape as a patchwork quilt of distinctive neighbourhoods, woven together by the many roads that wind their way over and around buildings, trees and rivers. Travelling in this way allows you to locate yourself in the city: as one person amongst many thousands, each going about their daily activities. Wrong turns may lead to discovery: of parks, historical monuments and local markets; of people and places to reconnect with again, perhaps. Ultimately, if you have the time to spare and are in an explorative mood, it is a much more rewarding way to travel.

In this metaphor, the robo-taxi represents the ‘direct answer’: a request to find a specific piece of information amongst a rich landscape of possibilities. You are reliant on the opaque workings of an algorithmic mind to navigate you there accurately and safely. You might view the private robo-taxi as playing the role of a search engine, offering a useful and convenient service for navigation. On the other hand, walking represents the process of organic knowledge discovery: one that embraces the unknown, engages our intuition, and ultimately invites us to become more intimate with our surroundings. It is an iterative process of sensing, responding and synthesising. We are required to trust in an ancient, evolutionary capacity to navigate the unknown, to recognise patterns and to form new connections. While both are valid processes of knowledge discovery, the former involves an outsourcing of knowledge synthesis to an external agent. The latter kind – the organic process of discovery – is a necessary and arguably more gratifying mode of sense-making in a complex world.

I offer the travelling metaphor as a backdrop against which to consider how seemingly legitimate and useful designs (e.g. ‘direct answers’) can give rise to unforeseen externalities. As with all socio-technical systems, they are deeply entangled, making the impact of new developments difficult to predict. This is a particularly important consideration in the development of information and knowledge systems, since they underpin the basis on which society makes informed choices. In his essay The manifesto of ontological design, designer and theorist Daniel Frago offers a lucid discussion of the necessity of ‘ontological design’ (OD), a theory and praxis ultimately concerned with the design of the human being or, perhaps more specifically, of perceptual experience. While all design is ontological (i.e. in existing, it has an effect), Frago suggests that the subject of OD is really the post-human. In other words, what we are really designing are the ‘processes of human becoming’. Ontological design thus invites us to acknowledge our inseparable relationship with technology and to become intentional stewards of our own evolution. Recalling this essay’s introduction on the centrality of information processing in evolutionary systems, it should be clear that nothing could be more implicated in this process of human becoming than information itself. Could our information systems be designed to support collective sense-making and shared understanding (rather than mis- and disinformation, polarisation and hate speech)? How do we ensure that our information ecologies include marginalised voices rather than merely those leveraging social and/or financial capital? Can we achieve balanced perspectives in contested domains while remaining critical and upholding a commitment to Truth? These are the kinds of questions pursued by ontological design.

One can appreciate the technical prowess of modern search engines. They are the portals through which we navigate a vast information network, which is why Google.com is the most visited website in the world (YouTube.com – effectively a search engine for video content, and owned by Google’s holding company, Alphabet – is the second most visited). Yet they offer the illusion of unbiased knowledge discovery when almost everything is mediated by algorithms whose inner workings remain largely opaque to the general public and to potential regulators. We observe a similar opacity in the data usage and algorithms orchestrating social media platforms and online marketplaces. Films like The Social Dilemma have brought the discussion into mainstream consciousness, raising awareness of the psychosocial ills created by social media platforms. Such is the result of designs oriented according to the values and operating logics of technologists and designers who are themselves deeply embedded in and dependent upon an economic system of unending growth. These logics underpin and reinforce the accumulating tendencies of a system that began, and continues, with the extraction of natural resources and labour, and which has now, in modern times, led to the harvesting and sculpting of our very psyches. Unfortunately, this is the context in which ‘data’ – and the associated systems for collection, organisation and interpretation – currently finds itself. However, it is not a given. Looking deeper into how these dynamics play out online today orients us to the possibility of cultivating new practices and designing systems capable of resisting extractivist logics.

Aligning information-based practices and knowledge systems around data commoning articulates a way out of these logics and into new ones characterised by positive-sum dynamics, information abundance and deep relationality. A data commons can be articulated as a living socio-technical system, owned and stewarded collectively, that is designed to support the collection, storage and processing of data in a way that addresses society’s needs. As David Bollier and Silke Helfrich argue in their classic text, Free, Fair & Alive, commons are ‘living social systems’ and may be more suitably described with the verb ‘commoning’. Speaking of data commoning reveals its cybernetic nature: a kind of ongoing conversation among humans, the biosphere and our technologies. Without a commons-centric ontology to guide us, data-based practices will remain limited in their transformative potential.

Transitioning from ‘big data’ to what MIT theorist Alex Pentland calls ‘shared data’ would improve the flow and diversity of information between societal actors, thus improving collective sense-making. Removing barriers to access – namely financial and literacy barriers – could help communities and grassroots organisations benefit from data-driven decision-making. Democratising data generation and increasing the visibility of data provenance can help align society toward truth-seeking and shared understanding. Improving mechanisms for voluntary data collection and analysis means that existing organisations and communities already aligned and intimate with their social context can leverage data-driven insights toward addressing shared goals. The social impact of a data commons is thus intimately tied to the actions of communities solving problems together.

On the one hand, data commoning invites us to dissolve existing data silos and to develop policies that support data sovereignty and privacy-preserving practices. On the other hand, it entails designing and weaving together new systems, methodologies and practices able to resist existing tendencies toward centralisation and exploitation. Computational techniques such as secure multi-party computation and P2P application architectures such as Holochain are assistive technologies in this regard. While these kinds of technologies may be required in the development of scalable, robust and equitable data commons, the challenge is not merely technological. Cultural shifts are also needed. Commons-aligned actors attempting to subvert, reorient and ultimately live outside the all-embracing logics of neoliberal capitalism ought to recognise the power (and thus the political nature) of data and its potential as a territory of transition. Activists, grassroots organisations and communities working to leverage data-driven insights and to align data practices around the principles of the commons may discover a renewed sense of alignment and improved coordinative capacities. This is the context in which data must live and be stewarded.
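Secure multi-party computation covers a family of techniques; one of its simplest building blocks is additive secret sharing, sketched below under stated assumptions. Each participant splits a private value (say, a hypothetical household’s monthly energy use) into random shares that only add up to the true value when all of them are combined, so a group can compute a collective total without any single party seeing another’s input. The modulus, function names and example values are hypothetical; all parties are simulated in one process, and the sketch assumes they follow the protocol honestly and do not collude.

```python
import secrets

# Hypothetical modulus; it only needs to exceed the largest total we expect
MODULUS = 2**61 - 1

def share(value, n_parties):
    """Split value into n random shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    # The last share is chosen so that all shares add up to the original value
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Simulate n participants jointly computing the sum of their private values."""
    n = len(private_values)
    # all_shares[i][j] is the share of participant i's value sent to party j
    all_shares = [share(v, n) for v in private_values]
    # Party j only ever sees column j: uniformly random-looking numbers
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining the partial sums reveals the total, but not any individual input
    return sum(partial_sums) % MODULUS

print(secure_sum([12, 30, 7]))  # 49
```

In a real deployment the shares would travel between separate machines, and stronger protocols are needed if participants might cheat or collude; the point here is only that a commons can compute over data it never pools in the clear.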